The misuse of copyrighted music by artificial intelligence companies could exploit musicians, a former executive at a leading tech startup has warned.

AI music generators are trained on a huge number of existing songs, which they draw on to generate music based on a text prompt.

Copyrighted work is already being used to train artificial intelligence models without permission, according to Ed Newton-Rex. He resigned from his role leading Stability AI’s audio team because he disagreed with the company’s view that training generative AI models on copyrighted works is “fair use” of the material.

Mr Newton-Rex told Sky News that his issue is not so much with Stability as a company as it is with the generative AI industry as a whole.

“Everyone really adopts this same position and this position is essentially we can train these generative models on whatever we want to, and we can do that without consent from the rights holders, from the people who actually created that content and who own that content,” he said.

Mr Newton-Rex added that one of the reasons large AI companies do not agree deals with artists and labels is that doing so involves “legwork” that costs them time and money.

Emad Mostaque, co-founder and chief executive of Stability AI, said that fair use supports creative development.

Fair use is a legal doctrine that allows copyrighted work to be used without the owner’s permission for specified non-commercial purposes such as research or teaching.

Stability’s audio generator, Stable Audio, gave musicians the option to opt out of its pool of training data.

The company has received 160 million opt-out requests since May 2023.

Millions of AI-generated songs are being created online every day, and big-name artists are even signing deals with technology giants to create AI music tools.

Tech giants like Google, YouTube and Sony are launching AI tools that allow anyone to generate music based on a text prompt.

Artists have agreed to let their work be used in these models, but there has been an influx of AI generators that are thought to have scraped music without the creators’ consent.

Bad Bunny, the Grammy award-winning singer from Puerto Rico, was the latest in a series of established artists to criticise the use of his voice without his consent in an AI-generated song that went viral in November.

He told his 20 million WhatsApp followers to leave if they liked “this s****y song that is viral on TikTok … I don’t want you on tour either.”

Boomy, an AI music generator that claims it does not use copyrighted work, said more than 18 million songs were produced using the platform as of November.

The Human Artistry Campaign, which represents music associations from across the world, has called for regulations to protect copyright and ensure artists are given the option to license their work to AI companies for a fee.

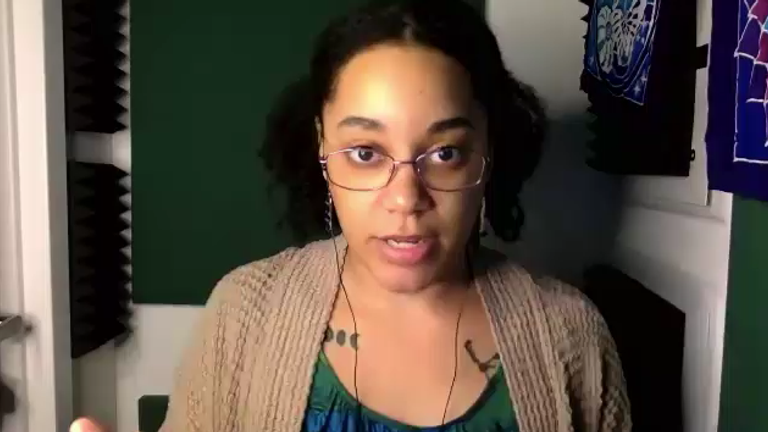

Moiya McTier, senior adviser to the campaign, said: “When artists’ work is used in these models, those artists have to be credited and compensated if they have given their consent to be used in these models.”